Let's talk spreadsheets and data. If you've been thinking about applying to college for any amount of time, you'll have come across college rankings. And not just your garden-variety 'Top 100 Colleges' lists, either: they've gotten increasingly niche. There are the 'Top 25 Hidden Ivies,' 'Top 50 Small Liberal Arts Colleges,' and recently, I even came across 'The 50 Most Underrated Colleges & Universities.'

After reviewing how five major ranking systems weighted their 2025 lists, I noticed a pattern that should concern every family: the factors students actually experience daily—class access, research opportunities, teaching quality—barely register in these methodologies. Here's what's really driving these rankings, and why you need a different approach.

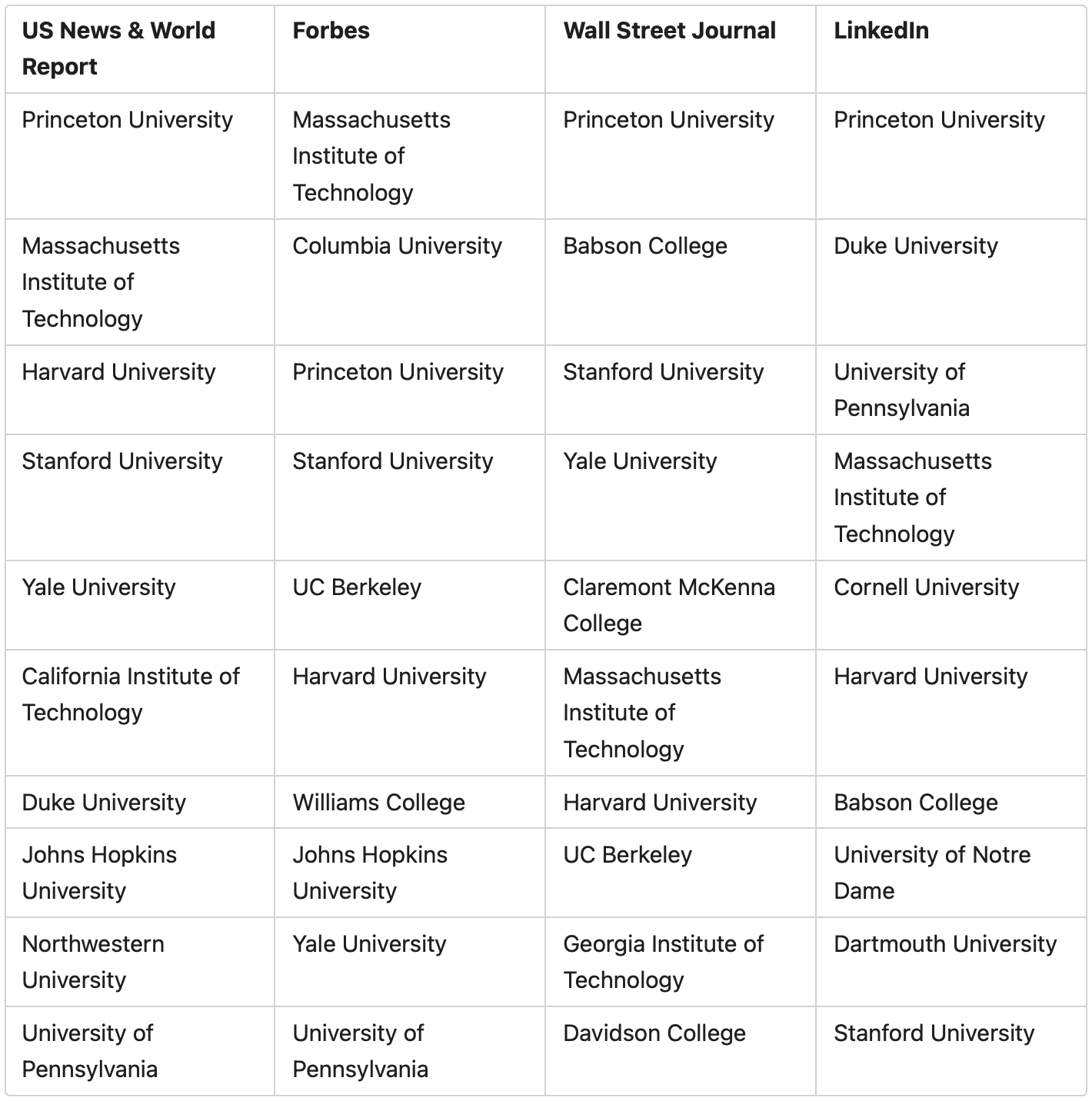

US News & World Report is the granddaddy of all the rankings, but hot on its heels are Forbes, The Wall Street Journal and Money. LinkedIn has even entered the fray as of this year. So let's take a look at each of their 'Top 20 Colleges' lists:

Some of the usual suspects, and some surprising entries. But the question I really want to spend time on here is this: what goes into each weighting strategy?

Each ranking system reveals its true values through how it prioritizes different factors:

US News recently made a large methodology shift that reveals how arbitrary these weightings really are. To emphasize social mobility, they eliminated five data points entirely:

Money Magazine is, unsurprisingly, laser focused on affordability and outcomes:

- 30%: Quality of education
- 40%: Affordability
- 30%: Outcomes
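To see what a 40% affordability weight does in practice, here is a simplified illustration. It assumes each component has already been converted to a common 0-100 score; Money's actual scoring and normalization are more involved, so treat the numbers below as hypothetical.

$$
S = 0.30\,Q + 0.40\,A + 0.30\,O
$$

For a hypothetical school with quality $Q = 90$, affordability $A = 60$, and outcomes $O = 80$:

$$
S = 0.30(90) + 0.40(60) + 0.30(80) = 27 + 24 + 24 = 75
$$

Under these weights, a 10-point gain in affordability lifts the composite by 4 points, while the same gain in education quality lifts it by only 3.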

Forbes emphasizes return on investment and career outcomes:

The Wall Street Journal's methodology, at a high level, is:

LinkedIn, a new entry into the rankings game, puts a lot of emphasis on career outcomes:

Here's the pattern I see across all these rankings: factors that my students care about deeply barely show up.

Take the student experience: things like being able to get the classes you need or having access to research opportunities carry little or no weight. Factors that shape the academic environment, such as class size and who's teaching your student, get little weight, if any, depending on the ranking.

The numbers bear this out: the WSJ weights "learning environment" at 20%, while US News weights "classroom factors" at only about 6-8%. These rankings measure what's easy to quantify (spending, ratios, outcomes) rather than what students actually experience in classrooms or on campus day to day.

Here's what this means for multi-passionate students specifically: This ranking approach is blind to what they need most. When rankings prioritize narrow metrics like starting salaries by major, they can't capture schools that excel at interdisciplinary learning or support students who want to combine fields in innovative ways. A school that's brilliant at helping students design double majors, explore multiple interests, or create independent studies gets no ranking boost for this flexibility.

I'm seeing this pattern play out in real time: As political winds have shifted and society has focused more on access, these rankings have moved away from valuing education-related factors like class size toward social mobility metrics. This is why big state R1 institutions like UCLA and UCSD have climbed the rankings significantly. They're no longer being penalized for large class sizes or graduate students teaching—factors that directly impact the learning experience.

Whether a ranking is useful to your family depends on whether you can find one that weights factors in a way that reflects your family's values. In my experience working with families, I've found that many of the things that matter most to my students simply don't show up in these rankings. Of course, they care about career outcomes, but not exclusively. Most care deeply about having a rich, engaging academic and social experience.

Here's what I recommend instead: create your own rankings. This is the approach I use with families, and it works consistently better than trying to decode someone else's priorities:

Start by clarifying what matters. Think about:

Here's the method that works well: create a spreadsheet with whatever columns matter to you. Add one column for selectivity (Likely, Target, Reach, High Reach) and another for 'Overall Fit Assessment.' I've used a 1-5 rating system for that one.

For each factor you care about, decide its relative importance. Here's a pattern I see: students passionate about research access weight "undergraduate research opportunities" heavily, while those focused on specific career outcomes prioritize "industry connections." Both approaches work—the key is intentional prioritization.
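One way to make "relative importance" concrete is a weighted average of per-factor ratings. The sketch below is a minimal illustration of that idea, not the author's template: the factor names, the 1-3 importance weights, and the 1-5 ratings are all hypothetical.

```python
# Minimal sketch: an importance-weighted average of 1-5 factor ratings.
# Factor names, weights, and ratings are hypothetical examples.


def weighted_fit(ratings: dict[str, float], weights: dict[str, float]) -> float:
    """Return the importance-weighted average of a college's 1-5 ratings.

    The result stays on the same 1-5 scale as the ratings. A factor that has
    a weight but no rating raises KeyError, which flags unfinished research.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("give at least one factor a positive weight")
    return sum(w * ratings[factor] for factor, w in weights.items()) / total_weight


# The same school, rated once on three factors...
college_a = {
    "undergraduate research opportunities": 5,
    "industry connections": 2,
    "class size": 4,
}

# ...scored under two different sets of priorities (3 = most important).
research_focused = {
    "undergraduate research opportunities": 3,
    "industry connections": 1,
    "class size": 2,
}
career_focused = {
    "undergraduate research opportunities": 1,
    "industry connections": 3,
    "class size": 2,
}

print(round(weighted_fit(college_a, research_focused), 2))  # 4.17
print(round(weighted_fit(college_a, career_focused), 2))    # 3.17
```

The same school lands about a full point apart depending on whose priorities are doing the weighting, which is the whole point of choosing the weights yourself.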

As you research each college, assess it against your criteria. Here's what I want you to look for:

This process reveals patterns you wouldn't see otherwise and might also help you to reevaluate some of your criteria.

Once you've completed your research, sort your list by selectivity and then fit assessment. Here's what you'll have: a balanced college list with your best fits rising to the top of each selectivity band.
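If you want to see the logic of that two-level sort spelled out, here's a small Python sketch. The college names and fit scores are hypothetical, and it assumes bands run from Likely to High Reach; flip the order if you'd rather see reaches first.

```python
# Sketch: sort a college list by selectivity band, then by fit score
# (best fit first) within each band. Colleges and scores are hypothetical.

# Assumed band order: least selective first.
SELECTIVITY_ORDER = ["Likely", "Target", "Reach", "High Reach"]

colleges = [
    {"name": "College A", "selectivity": "Reach", "fit": 4},
    {"name": "College B", "selectivity": "Likely", "fit": 3},
    {"name": "College C", "selectivity": "Reach", "fit": 5},
    {"name": "College D", "selectivity": "Target", "fit": 4},
    {"name": "College E", "selectivity": "Likely", "fit": 5},
]


def balanced_list(rows):
    """Sort rows by band position, then by fit score, highest first."""
    return sorted(
        rows,
        key=lambda r: (SELECTIVITY_ORDER.index(r["selectivity"]), -r["fit"]),
    )


for row in balanced_list(colleges):
    print(f'{row["selectivity"]:<10} {row["fit"]}  {row["name"]}')
```

Negating the fit score inside the key tuple is a simple way to get "band ascending, fit descending" in a single `sorted` call.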

Your priorities will likely shift as you learn more about colleges and as your student's interests evolve. This is exactly why designing your own system works better than accepting someone else's formula: it adapts as you do. It's also why my weighted decision template below can be a real timesaver. You can tweak your weighting strategy as you go, and your list auto-sorts.

Here's a Google Sheets template with some columns preconfigured to get you started. To use it, make your own copy.

Now, if you want to get a little fancier, you've come to the right place. I've created a tool that generates a weighted spreadsheet for you, with the weights entirely defined by you.

Here's how it works: you create a column, define its possible values, and give each value a weight. For example, you might create a column called 'Setting' with the values 'Urban,' 'Suburban,' 'College Town,' and 'Rural,' and weight them from 4 down to 1 because you prefer a city campus. Keep adding weighted columns until you're done, then hit 'Generate.' The result is an empty spreadsheet template containing your weighted columns. At that point you can add unweighted columns, such as 'Website' or 'Address,' that you want for reference only. As you enter the raw data, your spreadsheet will auto-sort by both selectivity and weighting.
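To make the mechanics concrete, here's a rough Python sketch of how column-and-value weights could turn into a score and a sorted list. It is not the tool's actual code: the second 'Size' column, the college data, and the choice to simply add the weights together are all assumptions for illustration.

```python
# Hypothetical sketch: score colleges from per-value column weights, then
# sort by selectivity band and score. Not the actual tool's implementation;
# the "Size" column, the data, and summing the weights are assumptions.

# Each weighted column maps every allowed value to a weight (higher = preferred).
WEIGHTED_COLUMNS = {
    "Setting": {"Urban": 4, "Suburban": 3, "College Town": 2, "Rural": 1},
    "Size": {"Small": 3, "Medium": 2, "Large": 1},  # hypothetical column
}

# Band order used for sorting (assumed: least selective first).
SELECTIVITY_ORDER = ["Likely", "Target", "Reach", "High Reach"]


def score(college: dict) -> int:
    """Add up the weight of this college's value in each weighted column.

    Unweighted columns such as "Website" are ignored.
    """
    return sum(weights[college[col]] for col, weights in WEIGHTED_COLUMNS.items())


def sort_list(colleges: list[dict]) -> list[dict]:
    """Sort by selectivity band, then by weighted score (highest first)."""
    return sorted(
        colleges,
        key=lambda c: (SELECTIVITY_ORDER.index(c["Selectivity"]), -score(c)),
    )


colleges = [
    {"Name": "College A", "Selectivity": "Target", "Setting": "Rural",
     "Size": "Small", "Website": "https://example.edu"},
    {"Name": "College B", "Selectivity": "Target", "Setting": "Urban",
     "Size": "Medium", "Website": "https://example.edu"},
    {"Name": "College C", "Selectivity": "Likely", "Setting": "Suburban",
     "Size": "Large", "Website": "https://example.edu"},
]

for c in sort_list(colleges):
    print(c["Selectivity"], score(c), c["Name"])
```

Here College C tops the list because it is the only Likely, and within the Target band the urban College B (score 6) sorts above the rural College A (score 4). A value that isn't in a column's mapping raises a `KeyError`, which is a cheap way to catch typos in the raw data.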

Rankings measure what's easy to quantify, not what creates transformative college experiences. For multi-passionate students especially, the most important factors—intellectual flexibility, interdisciplinary opportunities, and supportive exploration—don't appear in any ranking formula.

Here's what I recommend: instead of letting US News or Forbes define what matters, design your own system around your student's actual needs. The schools that rise to the top of your custom ranking will be the ones where your student is most likely to thrive, regardless of where they appear on someone else's list.